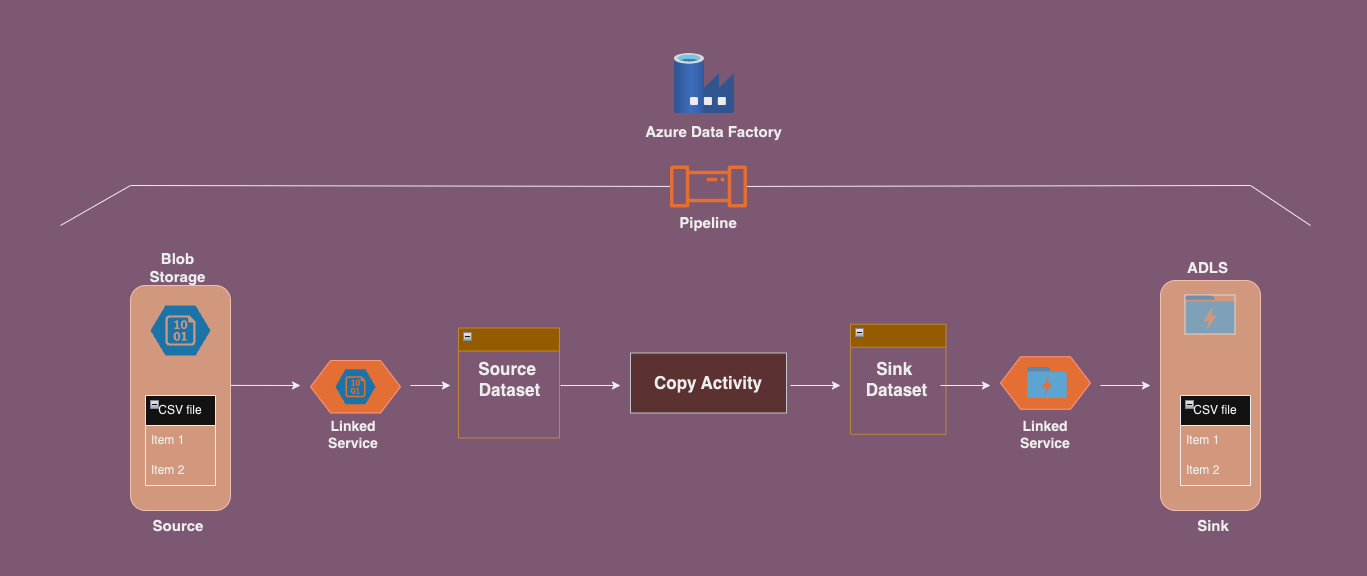

In this blog post, I will guide you through the process of copying a single file from Azure Blob Storage to Azure Data Lake Storage (ADLS) Gen2 using Azure Data Factory (ADF).

Objective:

The goal is to copy a single file (e.g., employees.csv) from a Blob Storage container to an ADLS Gen2 container using ADF.

Example:

- Source dataset: Blob Storage

- Sink dataset: ADLS Gen2

Prerequisites:

Before we dive into the steps, make sure the following prerequisites are in place:

- Create an Azure Blob Storage Account: This will serve as the source for the file you want to copy.

- Create an ADLS Gen2 Account: Ensure that the storage account has the hierarchical namespace enabled. That setting is what makes a storage account ADLS Gen2.

- Create Linked Services: In ADF, create two linked services, one for Blob Storage (source) and one for ADLS Gen2 (sink).

- Create Datasets: Create datasets for both the source (Blob Storage) and the sink (ADLS Gen2).

- Create a Pipeline: Set up a pipeline in ADF to handle the file transfer.
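ADF Studio can show each linked service as JSON, which is useful if you want to keep these definitions in source control. Here is a minimal sketch that builds the two definitions with Python's standard library.

It makes a few assumptions:

- Both linked services use managed-identity authentication. In that case the factory's identity also needs data-plane roles on the two accounts, such as Storage Blob Data Reader on the source and Storage Blob Data Contributor on the sink.
- Blob Storage is declared with the `AzureBlobStorage` type and a `serviceEndpoint`.
- ADLS Gen2 is declared with the `AzureBlobFS` type and a `url` pointing at the `dfs` endpoint.
- The names `BlobStorageLS` and `AdlsGen2LS` and the account names are hypothetical placeholders.

Compare the output with the JSON view of your own linked services rather than treating it as the exact definition ADF generates.

```python
import json


def linked_service(name: str, ls_type: str, type_properties: dict) -> dict:
    """Wrap type-specific settings in the linked-service envelope ADF uses in JSON."""
    return {
        "name": name,
        "properties": {"type": ls_type, "typeProperties": type_properties},
    }


# Hypothetical account names: replace them with your own storage accounts.
BLOB_ACCOUNT = "mysourceblobacct"
ADLS_ACCOUNT = "mysinkadlsacct"

# Source: Blob Storage. Assumes managed-identity auth, which only needs the
# blob service endpoint (no key or connection string in the JSON).
blob_ls = linked_service(
    "BlobStorageLS",  # hypothetical name
    "AzureBlobStorage",
    {"serviceEndpoint": f"https://{BLOB_ACCOUNT}.blob.core.windows.net/"},
)

# Sink: ADLS Gen2 uses the AzureBlobFS type and the dfs endpoint.
# Also assumes managed-identity auth.
adls_ls = linked_service(
    "AdlsGen2LS",  # hypothetical name
    "AzureBlobFS",
    {"url": f"https://{ADLS_ACCOUNT}.dfs.core.windows.net/"},
)

print(json.dumps([blob_ls, adls_ls], indent=2))
```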

Hands-on Steps:

Complete the Prerequisites:

- Make sure the prerequisites above are in place, in particular the Blob Storage and ADLS Gen2 accounts and the two linked services. The datasets and the pipeline are built in the steps below.

Set Up the Source in Blob Storage:

- In Blob Storage, create a container (e.g., `landing`) and upload the file you want to copy. For this example, we'll use `employees.csv`.
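Once the file is uploaded, it helps to know exactly where it lives. Blob Storage addresses a blob as `https://<account>.blob.core.windows.net/<container>/<blob>`. The small helper below just assembles that URL, percent-encoding the blob name. The account name `mysourceblobacct` is a hypothetical placeholder.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the Blob Storage URL of a blob, percent-encoding the blob name."""
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"


# Hypothetical account name; container and file name are the ones used in this post.
print(blob_url("mysourceblobacct", "landing", "employees.csv"))
# https://mysourceblobacct.blob.core.windows.net/landing/employees.csv
```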

Set Up the Sink in ADLS:

- In ADLS Gen2, create a container (e.g., `output`) where the copied file will be stored.
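ADLS Gen2 exposes the same storage through its `dfs` endpoint. Hadoop- and Spark-style tools address it with the `abfss://<container>@<account>.dfs.core.windows.net/<path>` scheme.

The helper below returns both forms for a path in the sink container. This is handy when you later read the copied file from other tools. The account name `mysinkadlsacct` is a hypothetical placeholder.

```python
def adls_uris(account: str, container: str, path: str = "") -> dict:
    """Return the HTTPS (dfs endpoint) and abfss forms of a path in an ADLS Gen2 container."""
    path = path.lstrip("/")  # avoid a double slash after the container
    return {
        "https": f"https://{account}.dfs.core.windows.net/{container}/{path}",
        "abfss": f"abfss://{container}@{account}.dfs.core.windows.net/{path}",
    }


# Hypothetical account name; "output" is the sink container from this post.
for kind, uri in adls_uris("mysinkadlsacct", "output", "employees.csv").items():
    print(kind, uri)
```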

Create the Source Dataset:

- In ADF, create a source dataset for the Blob Storage container.

- Set the file path to `landing` and the file name to `employees.csv`.
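Behind the UI, ADF stores the dataset as JSON. The sketch below rests on a few assumptions:

- The file is read with the DelimitedText (CSV) format.
- The file has a header row.
- The location is an `AzureBlobStorageLocation` with a `container` and a `fileName`.
- `BlobStorageLS` and `SourceEmployeesCsv` are hypothetical names.

Check it against your dataset's JSON view in ADF Studio.

```python
import json


def source_dataset(linked_service: str, container: str, file_name: str) -> dict:
    """DelimitedText dataset that points at a single blob in Azure Blob Storage."""
    return {
        "name": "SourceEmployeesCsv",  # hypothetical dataset name
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,  # the container part of "File path"
                    "fileName": file_name,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,  # assumption: the CSV has a header row
            },
        },
    }


source_ds = source_dataset("BlobStorageLS", "landing", "employees.csv")
print(json.dumps(source_ds, indent=2))
```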

Create the Sink Dataset:

- Similarly, create a sink dataset for the ADLS Gen2 container.

- Set the file path to `output`, the container where the copied file will be stored.
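The sink dataset differs from the source mainly in its location. ADLS Gen2 uses an `AzureBlobFSLocation` with a `fileSystem` (the container) where Blob Storage uses `container`.

The sketch assumes DelimitedText again, and `AdlsGen2LS` and `SinkOutputFolder` are hypothetical names. No file name is set. As far as I understand the Copy activity's default behavior for file-to-file copies, that lets the copied file keep its source name, `employees.csv`.

```python
import json


def sink_dataset(linked_service: str, file_system: str) -> dict:
    """DelimitedText dataset that points at a container (file system) in ADLS Gen2."""
    return {
        "name": "SinkOutputFolder",  # hypothetical dataset name
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    # ADLS Gen2 calls the container a "file system".
                    "type": "AzureBlobFSLocation",
                    "fileSystem": file_system,
                    # No "fileName": the copy is expected to keep the source file name.
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
            },
        },
    }


sink_ds = sink_dataset("AdlsGen2LS", "output")  # "AdlsGen2LS" is a hypothetical name
print(json.dumps(sink_ds, indent=2))
```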

Create a Pipeline:

- In ADF, create a new pipeline.

- Add a Copy data activity to the pipeline.

- On the Source tab, select the Blob Storage dataset (pointing to the `employees.csv` file).

- On the Sink tab, select the ADLS Gen2 dataset (pointing to the `output` container).
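The Copy data activity's settings end up in the pipeline's JSON. The sketch below builds a pipeline with one `Copy` activity. It reads DelimitedText from Blob Storage through `AzureBlobStorageReadSettings` and writes DelimitedText to ADLS Gen2 through `AzureBlobFSWriteSettings`.

This assumes the DelimitedText format on both sides. The pipeline, activity, and dataset names are hypothetical, so treat the result as a sketch to compare with the pipeline's JSON view in ADF Studio.

```python
import json


def copy_pipeline(source_ds: str, sink_ds: str) -> dict:
    """Pipeline with a single Copy activity: DelimitedText from Blob Storage to ADLS Gen2."""
    copy_activity = {
        "name": "CopyEmployeesCsv",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_ds, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_ds, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {
                "type": "DelimitedTextSource",
                # Read settings for the Blob Storage side.
                "storeSettings": {"type": "AzureBlobStorageReadSettings"},
            },
            "sink": {
                "type": "DelimitedTextSink",
                # Write settings for the ADLS Gen2 (AzureBlobFS) side.
                "storeSettings": {"type": "AzureBlobFSWriteSettings"},
                "formatSettings": {
                    "type": "DelimitedTextWriteSettings",
                    "fileExtension": ".csv",
                },
            },
        },
    }
    return {
        "name": "CopyEmployeesPipeline",  # hypothetical pipeline name
        "properties": {"activities": [copy_activity]},
    }


pipeline = copy_pipeline("SourceEmployeesCsv", "SinkOutputFolder")
print(json.dumps(pipeline, indent=2))
```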

Debug the Pipeline:

- Click Debug on the pipeline canvas to test the pipeline configuration without publishing it first.

- Check the Output tab for errors or warnings, and confirm that the Copy data activity finished with the status Succeeded.
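From the Output tab you can open the JSON output of the Copy data activity. The check below assumes that output contains the `filesRead`, `filesWritten` and `errors` fields that copy runs normally report.

For a single-file copy, both file counts should be 1 and `errors` should be empty. The sample output in the code is hypothetical, for illustration only.

```python
import json


def copy_output_problems(output_json: str, expected_files: int = 1) -> list[str]:
    """List problems found in a Copy activity's output JSON; an empty list means it looks fine."""
    out = json.loads(output_json)
    problems = []
    if out.get("errors"):  # assumed to be a (normally empty) list of error objects
        problems.append(f"errors reported: {out['errors']}")
    for field in ("filesRead", "filesWritten"):
        if out.get(field) != expected_files:
            problems.append(f"{field} is {out.get(field)}, expected {expected_files}")
    return problems


# Hypothetical output pasted from the Output tab; the values are illustrative only.
sample = '{"dataRead": 2048, "dataWritten": 2048, "filesRead": 1, "filesWritten": 1, "errors": []}'
print(copy_output_problems(sample) or "copy looks good")
```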

Test the Output:

- Once the pipeline runs successfully, go to your ADLS Gen2 account and check the `output` container. The `employees.csv` file should be present in the container.
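Finding the file in the container confirms the copy ran. To check the content as well, you could download the copied file and compare it with the original.

A DelimitedText-to-DelimitedText copy parses and rewrites the file, so the bytes may not match exactly: quoting or line endings can differ. For that reason the sketch below compares parsed rows rather than a checksum. It assumes both files are UTF-8 and small enough to load into memory.

```python
import csv
import io
from pathlib import Path


def parse_rows(text: str) -> list[list[str]]:
    """Parse CSV text into rows, so quoting and line-ending differences do not matter."""
    return list(csv.reader(io.StringIO(text, newline="")))


def same_csv_content(original: Path, copied: Path) -> bool:
    """True if two CSV files contain the same rows."""

    def load(path: Path) -> list[list[str]]:
        # utf-8-sig also accepts files that start with a byte-order mark.
        with path.open(newline="", encoding="utf-8-sig") as f:
            return parse_rows(f.read())

    return load(original) == load(copied)
```

For example, call `same_csv_content(Path("employees.csv"), Path("employees_from_output.csv"))`. Both paths are hypothetical local files: the one you uploaded and the copy you downloaded from `output`.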

Conclusion:

By following these steps, you can copy a single file from Azure Blob Storage to ADLS Gen2 using Azure Data Factory. The setup is simple, and once the pipeline works it can be reused to automate file transfers between cloud storage accounts.

Screenshots: dataset (sink), dataset (source), Copy data activity (sink), Copy data activity (source).