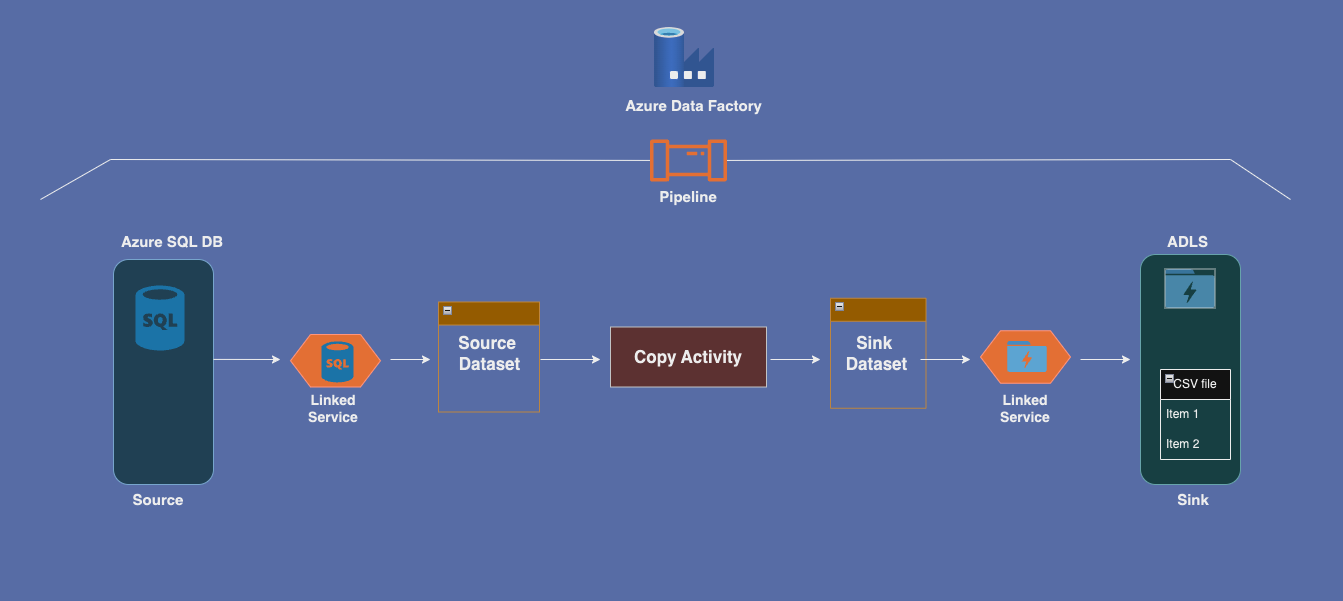

In this blog post, we’ll walk through how to copy data from an Azure SQL table to a CSV file in Azure Data Lake Storage (ADLS) using Azure Data Factory (ADF). By leveraging ADF’s Copy Activity, you can easily extract data from a SQL database and store it in a CSV format within ADLS.

Objective:

To copy data from an Azure SQL table and save it as a CSV file in Azure Data Lake Storage using a simple pipeline.

Example:

We will use a Copy Activity in ADF where the Source Dataset is an Azure SQL table, and the Sink Dataset is a CSV file in ADLS. Additionally, we’ll explore how to use a custom SQL query to filter data before copying it.

Pre-requisites:

Before you begin, ensure that the following are set up:

- Azure SQL Database: Create an Azure SQL Database with sample tables by choosing the advanced settings during setup.

- Azure Data Lake Storage (ADLS) Account: Ensure the ADLS account is set up with the Hierarchical Namespace enabled.

- Linked Services: Create two linked services in ADF – one for the Azure SQL Database (source) and one for the ADLS account (sink).

- Datasets: Create two datasets – one for the source (SQL table) and one for the sink (ADLS folder).

- ADF Pipeline: Create a pipeline in ADF to perform the data copy activity.

Hands-on Steps:

- Complete Pre-requisites: Make sure that all the necessary pre-requisites mentioned above are in place.

- Configure SQL Database as the Source:

- In ADF, create a dataset for the Source.

- Select the SalesLT.Customer table from your Azure SQL Database as the source.

- Set Up ADLS as the Sink:

- In your ADLS account, create a container (e.g.,

output) where the CSV files will be stored. - Create a Sink Dataset in ADF, and set the File Path to point to the

outputcontainer in ADLS.

- In your ADLS account, create a container (e.g.,

- Create a Pipeline:

- In ADF, create a pipeline and add a Copy Activity to it.

- For the Source settings, select the dataset pointing to the SalesLT.Customer table.

- For the Sink settings, select the dataset pointing to the output container in ADLS.

- Optional Query in Copy Activity:

- If you want to copy only specific data from the source, use a SQL query in the Copy Activity’s Source Settings. For example:

SELECT * FROM SalesLT.Product WHERE color = 'red' - This query filters the data to only copy rows where the product color is red from the SalesLT.Product table, even though the dataset points to the Customer table.

- If you want to copy only specific data from the source, use a SQL query in the Copy Activity’s Source Settings. For example:

- Debug the Pipeline:

- Once the pipeline is configured, use the Debug option to test the pipeline and ensure everything works as expected.

- Test the Output:

- After running the pipeline, check your ADLS account to ensure the data has been copied to the

outputcontainer as a CSV file.

- After running the pipeline, check your ADLS account to ensure the data has been copied to the

Dataset_SQLDB_Source

Dataset_ADLS_Sink

Copy Activity Source Settings

Copy Activity Sink Settings

How ADF Executes the Pipeline:

During the execution of the pipeline, Azure Data Factory follows these steps:

- Connection: ADF uses the connection information defined in the linked service to connect to the source database.

- Query Execution: If a custom SQL query is specified in the Copy Activity, ADF runs the query on the database.

- Data Extraction: ADF fetches the data based on the query (or the default table if no query is provided) and transfers it to the sink.

For example, if you provided the SQL query SELECT * FROM SalesLT.Product WHERE color = 'red' in the Copy Activity, ADF will fetch the data from the Product table instead of the Customer table that is defined in the source dataset.

Key Takeaway:

The table defined in the Source Dataset is used only when no custom query is provided in the Copy Activity. When a query is provided, ADF uses the query to fetch data, and the dataset’s table configuration is ignored.

By following these steps, you can successfully copy data from an Azure SQL table to a CSV file in ADLS. This pipeline configuration allows you to either copy the entire table or use SQL queries to filter the data, making the process flexible and efficient.