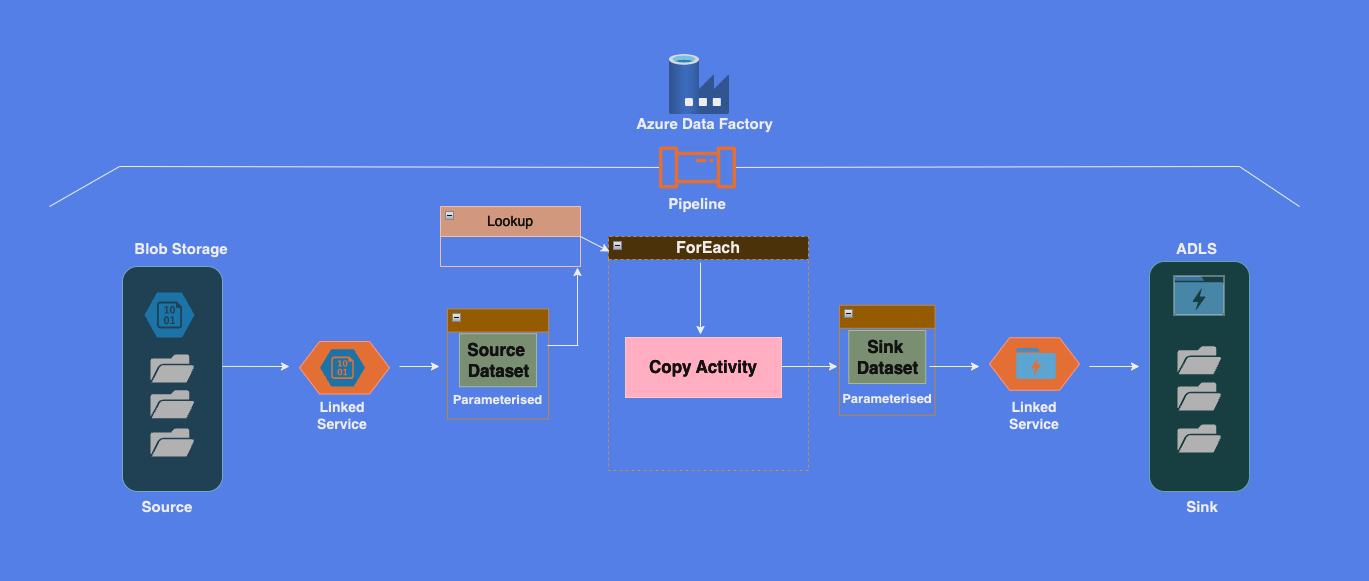

In this post, I will walk through how to automate the process of copying multiple files or folders in Azure Data Factory (ADF) using Lookup and ForEach activities. This approach allows dynamic copying of files from Azure Blob Storage to Azure Data Lake, simplifying workflows with flexible automation.

Scenario Example:

You have a control file (e.g., controlfile.csv) stored in Azure Blob Storage that contains a list of directories (e.g., customer, product). The Lookup activity reads this control file and passes the list of directories to a ForEach activity. Inside the ForEach activity, a Copy activity dynamically copies each directory from Blob Storage to Azure Data Lake, based on the parameters provided.

Pre-requisites:

To follow along, ensure you have the following set up:

- Azure Blob Storage account: To store and manage your files.

- Azure Data Lake Storage (ADLS) account: Blob storage with hierarchical namespaces enabled.

- Azure Data Factory (ADF): For creating and running pipelines.

- Linked services in ADF: Create two linked services (source and sink) that define your connection to Blob Storage and ADLS.

- Control File: Create a

controlfile.csvthat contains the source and destination containers.

Here’s an example structure for the control file:

Source_Container,Sink_Container

customer,custout

orders,orderout

product,productout

Once this is ready, copy the controlfile.csv to the metadata folder in the source Blob Storage container.

Hands-on Steps:

- Complete Pre-requisites: Ensure all the prerequisites mentioned above are set up.

- Create Source Containers: At the source side, create containers like

customer, order, and product. Upload your CSV files into the respective folders within the containers. - Parameterised Dataset for Source:

- Create a parameterised dataset for the Source (Blob Storage) in ADF.

- File path and Filename should not be selected during dataset creation.

- Go to the Parameters tab and create a new parameter, e.g.,

ds_param_source_container. - In the Connection tab, click on the Container dropdown, add dynamic content, and select

ds_param_source_container. This will dynamically populate the container value during pipeline execution.

- Parameterized Dataset for Sink:

- Similarly, create a dataset for the Sink (Azure Data Lake).

- In the Parameters tab, create a new parameter, e.g.,

ds_param_sink_container. - In the Connection tab, use dynamic content to set the container value to

ds_param_sink_container.

- Create a Pipeline with Lookup Activity:

- Create a pipeline in ADF and add a Lookup activity (e.g.,

LookupControlFile). - Configure the Source dataset as the control file dataset, pointing to your

metadatafolder. - Unselect First row only and configure the dataset to read all rows.

- Create a pipeline in ADF and add a Lookup activity (e.g.,

- Add ForEach Activity:

- Add a ForEach activity after the Lookup.

- Set its Items property to

@activity('LookupControlFile').output.value, which contains the array of file details from the control file.

- Inside ForEach: Copy Activity:

- Inside the ForEach activity, add a Copy activity to handle file copying.

- In the Source settings, configure the Source dataset to use the dynamic parameter

@item().Source_Container. - Similarly, for the Sink settings, use the dynamic parameter

@item().Sink_Container.

Key Pipeline Settings:

- Source Dataset:

- File Path (Container):

@dataset().ds_param_source_container - File Name:

*(to select all files in the specified directory/container)

- File Path (Container):

- Sink Dataset:

- File Path (Container):

@dataset().ds_param_sink_container - File Name:

*(to copy all files in the specified directory/container)

- File Path (Container):

- Lookup Activity:

- Source dataset:

<select source dataset> - Dataset Properties:

ds_param_source_container = metadata - Wildcard file path:

* - File Name:

*

- Source dataset:

- ForEach Activity:

- Items:

@activity('LookupControlFile').output.value

- Items:

- Copy Activity (Source Settings):

- Dataset properties

ds_param_source_container = @item().Source_Container - Wildcard paths:

*

- Dataset properties

- Copy Activity (Sink Settings):

- Dataset properties

ds_param_sink_container = @item().Sink_Container - Wildcard paths:

*

- Dataset properties

Testing the Pipeline:

After configuring the pipeline, debug and run it. Test whether the folders/containers & respective files are copied correctly from the source containers to the destination containers based on the configurations in the controlfile.csv.